In part 2, we discussed a tangent-space implementation of the “interior mapping” technique, as well as the use of texture atlases for room interiors. In this post, we’ll briefly cover a quick and easy shadow approximation to add realism and depth to our rooms.

Hard Shadows

We have a cool shader which renders “rooms” inside of a building, but something is clearly missing. Our rooms aren’t affected by exterior light! While the current implementation looks great for night scenes where the lighting within the room can be baked into the unlit textures, it really leaves something to be desired when rendering a building in direct sunlight. In an ideal world, the windows into our rooms would cast soft shadows, which move across the floor as the angle of the sun changes.

Luckily, this effect is actually quite easy to achieve! Recall how we implemented the ray-box intersection in part 2. Each room is represented by a unit cube in tangent-space. The view ray is intersected with the cube, and the point of intersection is used to determine a coordinate in our room interior texture. As a byproduct of this calculation, the point of intersection in room-space is already known! We also currently represent windows using the alpha channel of the exterior texture. We can simply reuse this alpha channel as a “shadow mask”. Areas where the exterior is opaque are considered fully in shadow, since no light would enter the room through the solid wall. Areas where the exterior is transparent would be fully affected by light entering the room. If we can determine a sample coordinate, we can simply sample the exterior alpha channel to determine whether an interior fragment should be lit, or in shadow!

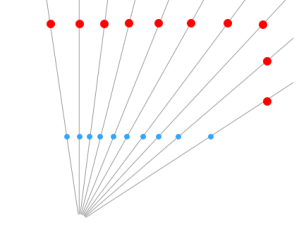

So, the task at hand: How do we determine the sample coordinate for our shadow mask? It’s actually trivially simple. If we cast the light ray backwards from the point of intersection between the view ray and the room volume, we can determine the point of intersection on the exterior wall, and use that position to sample our shadow texture!

Our existing effect is computed in tangent space. Because of this, all calculations are identical everywhere on the surface of the building. If we transform the incoming light direction into tangent space, any light shining into the room will always be more or less along the Z+ axis. Additionally, the room is axis-aligned, so the ray-plane intersection of the light ray and exterior wall can be simplified dramatically.
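To see why the intersection collapses so nicely, here is the derivation as a quick sketch. It assumes the exterior wall lies in the plane z = 0 of room-space. The symbols P (the room-space hit point, roomPos in the shader), L (the tangent-space light vector, IN.tLightVec) and Q (the point where the light ray crosses the wall) are names introduced here for illustration.

```latex
\begin{aligned}
Q(t) &= P + t\,L \\
Q_z(t) = 0 \;&\Rightarrow\; t = -\frac{P_z}{L_z} \\
Q_{xy} &= P_{xy} - \frac{L_{xy}}{L_z}\,P_z
\end{aligned}
```

Because the wall plane is axis-aligned, the full ray-plane test reduces to one divide and one multiply-add, and the result is the same whichever way L points along the ray. The shader additionally scales the depth by _RoomSize.z, presumably because roomPos.z is stored in normalized room units. Note that the expression is undefined when L_z = 0, that is, when the light runs parallel to the wall.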

    // This whole problem can be easily solved in 2D.

    // Determine the origin of the shadow ray. Since
    // everything is axis-aligned, this is just the
    // XY coordinate of the earlier ray-box intersection.
    float2 sOri = roomPos.xy;

    // Determine a 2D ray direction. This is the
    // "XY per unit Z" of the light ray.
    float2 sDir = (-IN.tLightVec.xy / IN.tLightVec.z) * _RoomSize.z;

    // Lastly, determine our shadow sample position. Since
    // our sDir is unit-length along the Z axis, we can
    // simply multiply by the depth of the fragment to
    // determine the 2D offset of the final shadow coord!
    float2 sPos = sOri + sDir * roomPos.z;

That’s about it! We can now scale the shadow coordinate to match the exterior wall texture, and boom! We have shadows.

Soft Shadows

We have hard shadows up and running, and everything is looking great. What we’d really like is to have soft shadows. Typically, these are rendered with some sort of filtering, a blur, or a fancy technique like penumbral wedges. That’s not going to work here. We’re trying to reduce the expense of rendering interior details. We’re not using real geometry, so we can’t rely on any traditional techniques either. What we need to do is blur our shadows, without actually performing a multi-sampled blur.

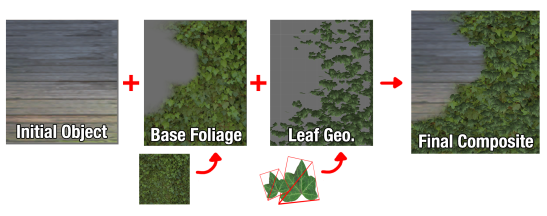

Like all good optimizations, we’ll start with an assumption. Our windows are a binary mask. They’re either fully transmissive, or fully opaque. In most cases this is how the effect will be used anyway, so the extra control isn’t a big loss. Now, with that out of the way, we can use the alpha channel of our exterior texture as something else!

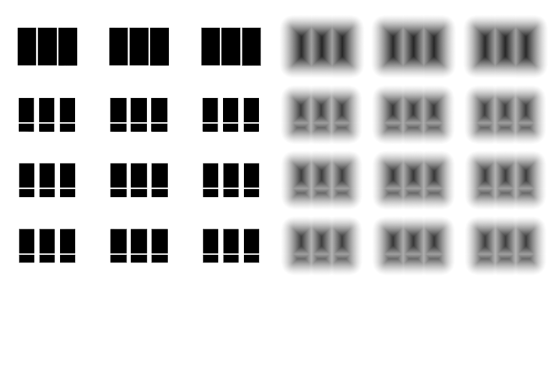

Signed Distance Fields

Signed Distance Fields have been around for a very long time, and are often used to render crisp edges for low-resolution decals, as suggested in Chris Green’s “Improved Alpha-Tested Magnification for Vector Textures and Special Effects”. Rather than storing the shadow mask itself in the alpha channel, we can store a new map where the alpha value represents the distance to the nearest border of the shadow mask, with the border itself sitting at the midpoint value of 0.5.
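One common way to pack a signed distance into an 8-bit alpha channel is to clamp the distance to a maximum spread and center the edge on 0.5. This is sketched here as an assumption, not necessarily the exact encoding used for these textures. Here d(p) is the signed distance from texel p to the nearest mask edge (positive inside the opaque wall, negative inside the window opening) and r is the chosen spread in texels. Both names are introduced for illustration.

```latex
\alpha(p) = \operatorname{clamp}\!\left(\frac{1}{2} + \frac{d(p)}{2r},\; 0,\; 1\right)
```

With this convention, an alpha of 0.5 marks the window's edge, values above 0.5 lie behind solid wall, and values below 0.5 lie in the window opening. That is consistent with the 0.5 threshold used in the shadow code in this post.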

Now, a single sample returns not just whether a point is in shadow, but the distance to the edge of a shadow! If we want our shadows to have soft edges, we can switch from a binary threshold to a range of “shadow intensity”, still using only a single sample!

The smoothstep function is a perfect fit for our shadow sampling, remapping a range to 0-1, with some nice easing. We can also take the depth of the fragment within the room into account to emulate the softer shadows you see at a distance from a light source. Simply specify a shadow range based on the Z coordinate of the room point, and we’re finished!
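As a minimal sketch of that idea, the helper below turns one SDF sample into a shadow factor. The function name SoftShadowFromSDF and its parameters are hypothetical. The depth argument is assumed to be the fragment's non-negative distance from the exterior wall, and the small epsilon is an added guard (not part of the original shader) that keeps the two smoothstep edges from coinciding.

```hlsl
// Hypothetical helper: converts a single SDF sample into a soft shadow factor.
//   sdfAlpha : alpha sampled from the SDF texture (0.5 assumed to be the window edge)
//   depth    : assumed non-negative distance of the fragment from the exterior wall
//   softness : how quickly the penumbra widens with depth (e.g. _ShadowSoftness)
// Returns 0 where the fragment is lit and 1 where it is fully in shadow.
float SoftShadowFromSDF(float sdfAlpha, float depth, float softness)
{
    // Upper edge of the penumbra: moves away from 0.5 as depth increases.
    float upper = saturate(0.5 + softness * depth);

    // Added guard (assumption): keep the edges distinct, because smoothstep
    // may divide by zero internally when both edges are equal.
    upper = max(upper, 0.5 + 1e-4);

    // Smoothly remap the sampled distance from [0.5, upper] to [0, 1].
    return smoothstep(0.5, upper, sdfAlpha);
}
```

Right at the window the range collapses to a near-hard edge, and it widens as the fragment moves deeper into the room, so the shadow softens with distance from the window. With the variables used in this post, a call might look like SoftShadowFromSDF(shadowDist, -roomPos.z * _RoomSize.z, _ShadowSoftness).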

Putting it all Together!

All together, our final shadow code looks like this.

    #if defined(INTERIOR_USE_SHADOWS)
        // Cast a ray backwards, from the point in the room opposite
        // the direction of the light. Here, we're doing it in 2D,
        // since the room is in unit-space.
        float2 sOri = roomPos.xy;
        float2 sDir = (-IN.tLightVec.xy / IN.tLightVec.z) * _RoomSize.z;
        float2 sPos = sOri + sDir * roomPos.z;

        // Now, calculate shadow UVs. This is remapping from the
        // light ray's point of intersection on the near wall to the
        // exterior map.
        float2 shadowUV = saturate(sPos) * _RoomSize.xy;
        // Apply tiling (xy) first, then offset (zw). Note that writing
        // "shadowUV *= ST.xy + ST.zw" would multiply by the sum instead.
        shadowUV = shadowUV * _Workaround_MainTex_ST.xy + _Workaround_MainTex_ST.zw;

        // Finally, sample the shadow SDF, and simulate soft shadows
        // with a smooth threshold.
        fixed shadowDist = tex2D(_ShadowTex, shadowUV).a;
        fixed shadowThreshold = saturate(0.5 + _ShadowSoftness * (-roomPos.z * _RoomSize.z));
        float shadow = smoothstep(0.5, shadowThreshold, shadowDist);

        // Make sure we don't illuminate rooms facing opposite the light.
        shadow = lerp(shadow, 1, step(0, IN.tLightVec.z));

        // Finally, modify the output albedo with the shadow constant.
        iAlbedo.rgb = iAlbedo.rgb * lerp(1, _ShadowWeight, shadow);
    #endif

https://gfycat.com/DangerousRectangularDungbeetle

And that’s all there is to it! Surprisingly simple, and wonderfully cheap to compute!

There’s still room for improvement. At the moment the shadow approximation supports only a single directional light source. This is fine for many applications, but may not work for games where the player is in control of a moving light source. Additionally, this directional light source is configured as a shader parameter, and isn’t pulled from the Unity rendering pipeline, so additional scripts will be necessary to ensure it stays in sync.

For deferred pipelines, it may be possible to use a multi-pass approach and write the interior geometry directly into the G-buffers, allowing for fully accurate lighting. The shadows, however, would still be subject to the same limitations.

Still, I’m quite happy with the effect. Using relatively little math, it is definitely possible to achieve a great interior effect for cheap!